Algorithm created to analyse large single-cell sequencing datasets

Posted: 20 April 2021 | Victoria Rees (Drug Target Review)

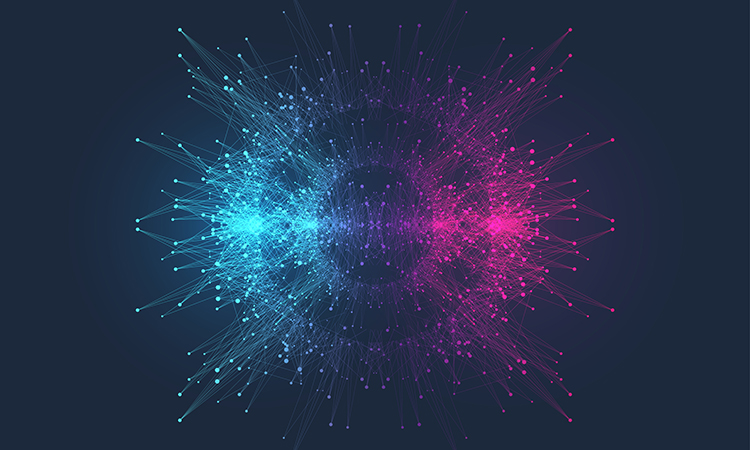

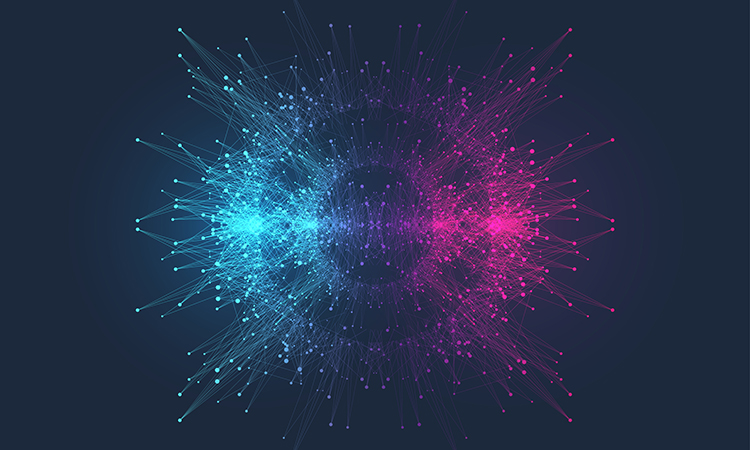

An algorithm which continuously processes new data has been developed to allow researchers to access and analyse single-cell sequencing information.

A newly developed algorithm uses online learning to provide a way for researchers around the world to analyse massive sets of single-cell sequencing data using the amount of memory found on a standard laptop computer. The technology was developed by a team from the University of Michigan, US.

“Our technique allows anyone with a computer to perform analyses at the scale of an entire organism,” said Dr Joshua Welch, one of the lead researchers. “That is really what the field is moving towards.”

The team demonstrated their proof of principle using datasets from the US National Institutes of Health’s (NIH) BRAIN Initiative, a project that aims to understand the human brain by mapping every cell.

According to the researchers, in projects like this one, each newly submitted single-cell dataset typically has to be re-analysed together with all the datasets that arrived before it. The new approach allows new datasets to be added to existing ones without reprocessing the older datasets. It also enables researchers to break up datasets into so-called mini-batches to reduce the amount of memory needed to process them.
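The mini-batch idea can be illustrated with a minimal Python sketch. This is not the researchers' algorithm, which the article does not describe in detail; a simple streaming mean and variance per gene (Welford's method) is swapped in to show the general pattern. The class `RunningGeneStats`, the helper `mini_batches` and the toy datasets are all hypothetical names and values chosen for illustration. The point is that only one small batch is held in memory at a time, and that a dataset arriving later can be folded in without revisiting the earlier one.

```python
from statistics import fmean, pvariance


class RunningGeneStats:
    """Per-gene mean and variance, updated one mini-batch at a time.

    Illustrative stand-in only: not the method described in the article.
    """

    def __init__(self, n_genes):
        self.n_cells = 0
        self.mean = [0.0] * n_genes
        self.m2 = [0.0] * n_genes  # running sum of squared deviations

    def update(self, batch):
        """Fold in a mini-batch (a list of cells, each a list of gene counts)."""
        for cell in batch:
            self.n_cells += 1
            for g, x in enumerate(cell):
                delta = x - self.mean[g]
                self.mean[g] += delta / self.n_cells
                self.m2[g] += delta * (x - self.mean[g])

    def variance(self):
        """Population variance per gene over all cells seen so far."""
        return [m2 / self.n_cells for m2 in self.m2]


def mini_batches(cells, size):
    """Yield consecutive slices of at most `size` cells."""
    for start in range(0, len(cells), size):
        yield cells[start:start + size]


# Toy count matrices (rows are cells, columns are genes); values are made up.
dataset_a = [[1, 0, 3], [2, 1, 0], [0, 4, 1], [5, 2, 2], [3, 3, 3]]
dataset_b = [[0, 0, 6], [4, 1, 1], [2, 2, 0]]

stats = RunningGeneStats(n_genes=3)

# Process the first dataset two cells at a time.
for batch in mini_batches(dataset_a, size=2):
    stats.update(batch)

# A second dataset arrives later and is added without touching dataset_a again.
for batch in mini_batches(dataset_b, size=2):
    stats.update(batch)

# Reference values computed the memory-hungry way, with everything loaded at once.
all_cells = dataset_a + dataset_b
expected_mean = [fmean(col) for col in zip(*all_cells)]
expected_var = [pvariance(col) for col in zip(*all_cells)]
```

In this sketch the running statistics match the values computed from the full combined matrix (up to floating-point rounding), while memory use depends on the batch size rather than on the total number of cells.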

“This is crucial for the sets increasingly generated with millions of cells,” Welch said. “This year, there have been five to six papers with two million cells or more and the amount of memory you need just to store the raw data is significantly more than anyone has on their computer.”

Welch likens the online technique to the continuous data processing done by social media platforms, which must handle a constant stream of user-generated data and serve up relevant posts to people’s feeds.

“Here, instead of people writing tweets, we have labs around the world performing experiments and releasing their data,” said Welch. “Understanding the normal complement of cells in the body is the first step towards understanding how they go wrong in disease.”

The findings are described in Nature Biotechnology.

Related topics

Analysis, Bioinformatics, Informatics, Next-Generation Sequencing (NGS), Sequencing

Related organisations

University of Michigan

Related people

Dr Joshua Welch